In our world of complex computers, lightning-fast internet searches, and “smart” devices, it’s easy to take for granted the fundamental language these machines speak. It’s a language not of words, but of pure logic, built on simple concepts of TRUE and FALSE, ON and OFF, 1 and 0. But have you ever wondered who first dreamed up this powerful system?

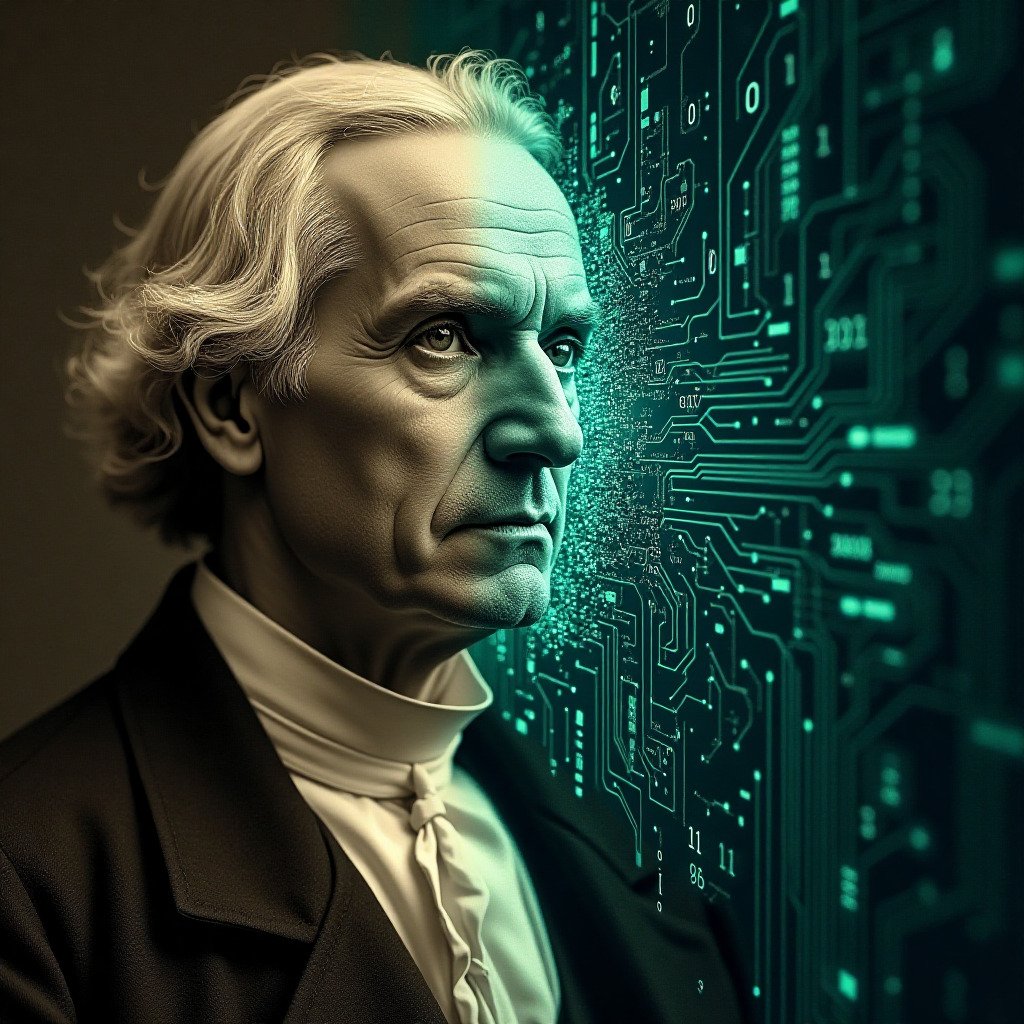

The answer takes us back to the 19th century, to a self-taught mathematician named George Boole. Welcome back to Sequentia, where today we explore the life and legacy of the man who, in essence, taught machines how to think.

Who Was George Boole?

Born in Lincoln, England, in 1815, George Boole was not a product of elite universities. His father, a shoemaker, had a passion for science and mathematics that he passed on to his son. Largely self-taught due to his family’s modest means, Boole devoured books on languages and mathematics, eventually becoming a schoolmaster and, in 1849, the first professor of mathematics at Queen’s College, Cork.

But his true genius lay in his ability to see a profound connection between two seemingly separate fields: mathematics and human thought.

The “Aha!” Moment: Logic as Algebra

Boole’s groundbreaking work, published in his 1854 book An Investigation of the Laws of Thought, proposed a revolutionary idea: what if human logical reasoning could be expressed using algebraic symbols and equations?

He developed a new kind of algebra, which we now call Boolean Algebra, where variables don’t represent traditional numbers, but rather the truth values of propositions: TRUE or FALSE.

He introduced simple logical operators that are now the bedrock of all computing:

- AND: Combines two statements. “The sun is shining AND it is warm” is TRUE only if both parts are true.

- OR: Combines two statements. “It is raining OR it is cloudy” is TRUE if at least one part is true.

- NOT: Inverts a statement. If “The light is on” is TRUE, then NOT “The light is on” is FALSE.
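To see the three operators side by side, here is a minimal Python sketch that lists every combination of two truth values along with the results of AND, OR, and NOT. The function name `truth_table` is our own invention for this illustration, and Python's built-in `and`, `or`, and `not` stand in for Boole's operators.

```python
from itertools import product

def truth_table():
    """Return one row (a, b, a AND b, a OR b, NOT a) per pair of truth values."""
    rows = []
    # product(...) walks through all four combinations of two True/False values.
    for a, b in product([False, True], repeat=2):
        rows.append((a, b, a and b, a or b, not a))
    return rows

for a, b, a_and_b, a_or_b, not_a in truth_table():
    print(f"a={a!s:5} b={b!s:5} AND={a_and_b!s:5} OR={a_or_b!s:5} NOT a={not_a!s:5}")
```

Notice that AND is TRUE in only one of the four rows, while OR is FALSE in only one.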

Using these, Boole could build logical equations to analyze complex arguments and deduce their validity with mathematical certainty. He had turned the fluid nature of human logic into a precise, calculable system.
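As a rough illustration of what "deducing validity" can look like, the Python sketch below tests an argument by brute force: an argument is valid if the conclusion is TRUE in every case where all the premises are TRUE. This is the modern truth-table approach rather than Boole's own algebraic procedure, and the helper names `implies` and `is_valid` are made up for this example.

```python
from itertools import product

def implies(p, q):
    """'If p then q' is FALSE only when p is TRUE and q is FALSE."""
    return (not p) or q

def is_valid(premises, conclusion, num_vars):
    """Check every assignment of truth values; a single counterexample makes the argument invalid."""
    for values in product([False, True], repeat=num_vars):
        if all(premise(*values) for premise in premises) and not conclusion(*values):
            return False
    return True

# r = "it is raining", w = "the ground is wet"
# Argument 1: if r then w; r; therefore w.
sound_argument = is_valid(
    [lambda r, w: implies(r, w), lambda r, w: r],
    lambda r, w: w,
    num_vars=2,
)

# Argument 2: if r then w; w; therefore r.
faulty_argument = is_valid(
    [lambda r, w: implies(r, w), lambda r, w: w],
    lambda r, w: r,
    num_vars=2,
)

print(sound_argument, faulty_argument)
```

The first argument passes. The second fails because the ground can be wet without rain, which is exactly the case the check finds.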

From Human Thought to Machine Language

For decades, Boole’s work remained a fascinating but somewhat niche area of mathematical logic. It wasn’t until the 1930s, some eight decades later, that its true, world-changing potential was realized.

A young engineer and mathematician named Claude Shannon, while studying electrical switching circuits, had his own “Aha!” moment. He recognized that the binary nature of electrical circuits – where a switch is either ON (1) or OFF (0) – could be perfectly described by George Boole’s algebra, where a proposition is either TRUE (1) or FALSE (0).

This was the pivotal connection. Boole’s logic provided the mathematical framework for designing and simplifying the complex digital circuits that power everything from your smartphone and laptop to the vast networks of the internet. Every time you perform a search on Google, use a calculator, or play a video game, you are relying on billions of microscopic switches performing Boolean logic operations at incredible speeds.
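To make the link between switches and logic concrete, here is a small Python sketch using the convention from this article, where a closed switch is ON (TRUE). Two switches in series pass current only if both are closed (AND), and two switches in parallel pass current if either is closed (OR). The function names are hypothetical, and the circuit is a toy example chosen to show one simplification, not a reconstruction of Shannon's own work.

```python
from itertools import product

def series(a, b):
    """Current flows through two switches in series only if both are closed."""
    return a and b

def parallel(a, b):
    """Current flows through two switches in parallel if at least one is closed."""
    return a or b

def original_circuit(a, b):
    # Switch a in parallel with (switch a in series with switch b): three switches in total.
    return parallel(a, series(a, b))

def simplified_circuit(a, b):
    # Boolean algebra's absorption law says a OR (a AND b) equals a: one switch is enough.
    return a

def equivalent(f, g, num_inputs):
    """Two circuits are equivalent if they agree for every combination of switch positions."""
    return all(
        f(*values) == g(*values)
        for values in product([False, True], repeat=num_inputs)
    )

print(equivalent(original_circuit, simplified_circuit, 2))
```

Here the algebra shows that a three-switch circuit behaves exactly like a single switch, which is the kind of saving that matters when a design contains billions of them.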

George Boole didn’t live to see a computer, but he laid the essential intellectual foundation. He created the language of logic that, when paired with electronics, allowed humanity to build machines that can “reason,” calculate, and process information on a scale he could never have imagined.

So, the next time you tackle a logic puzzle, remember George Boole – the humble genius who saw the algebra in our thoughts and, in doing so, paved the way for the digital age.